Machines are no longer just tools; they are now partners. In 2025, the relationship between artificial intelligence and hardware has strengthened in a way that few could have anticipated.

The days when AI was only software running on general-purpose processors are gone. Computing hardware in 2025 has been completely redefined by artificial intelligence.

AI has not just upgraded the hardware; it has transformed it for the better.

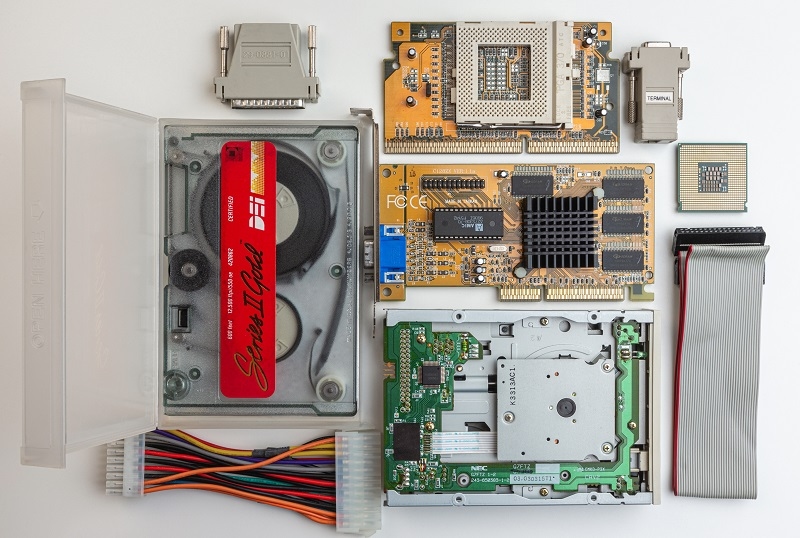

Hardware was first built to support basic tasks like storage, processing, and networking. It was all about speed and efficiency, but in 2025 those goals have expanded.

Now the focus is on adaptability, learning, and real-time response. Hardware is no longer passive; it is evolving alongside the algorithms it supports. You do not just turn on a machine: you wake it up, and it learns with you.

At the heart of this evolution is the emergence of artificial intelligence chips. These are not just faster processors; they are specialized components optimized for workloads built on patterns, predictions, and probabilities.

Why AI Chips Matter More in 2025

The following factors will help you understand the importance of AI chips:

AI chips are designed to handle the complex workloads of training and inference.

They deliver more performance for less power, which is crucial for mobile and embedded systems.

AI chips learn from ongoing data and optimize themselves accordingly.

Thousands of processing cores work simultaneously on separate streams of data, which speeds up computation.

AI optimizes how the hardware behaves, adjusts, and evolves. It is a feedback loop that keeps refining itself.
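The point above about many cores working on separate streams of data can be pictured with a small sketch. This is only an illustration of the structure, not how any real AI chip is programmed: the names `dot`, `run_parallel`, `weights`, and `streams` are hypothetical, and Python threads stand in for hardware cores without giving the same speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(weights, inputs):
    """Weighted sum of one input stream (the core operation in many models)."""
    return sum(w * x for w, x in zip(weights, inputs))


def run_parallel(weights, streams, workers=4):
    """Apply the same weights to every stream, one task per stream.

    Each stream is independent of the others, which is what lets real
    hardware hand them to separate cores. Threads are used here only to
    show that shape.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the input streams.
        return list(pool.map(lambda s: dot(weights, s), streams))


# Hypothetical example data: three independent input streams.
weights = [0.5, -1.0, 2.0]
streams = [
    [1.0, 2.0, 3.0],
    [0.0, 1.0, 0.0],
    [2.0, 2.0, 2.0],
]
results = run_parallel(weights, streams)
print(results)
```

The design point is that no stream depends on another stream's result, so the work can be split without coordination between workers.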

Training a machine learning model is not light work. It requires a lot of time and intense computation. This is why machine learning hardware is crucial.

It is no longer limited to academic labs or research institutions: this specialized hardware is now embedded in everything from personal devices to large-scale infrastructure.

This is no longer about brute force; it is about smart computation.

In 2025, even consumer-level devices can run models locally, which eliminates the need to constantly rely on cloud processing. This can improve privacy, reduce latency, and increase processing speed.
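To see why running a model locally can reduce latency, here is a back-of-the-envelope sketch. Every number in it is a made-up assumption chosen for illustration, not a measurement, and the function names are hypothetical.

```python
def cloud_latency_ms(network_round_trip_ms, server_inference_ms):
    """Total wait when the request travels to a data center and back."""
    return network_round_trip_ms + server_inference_ms


def local_latency_ms(device_inference_ms):
    """Total wait when the model runs on the device itself (no network hop)."""
    return device_inference_ms


# Assumed, illustrative figures: a fast server behind a slow network link,
# versus a slower on-device chip with no network hop at all.
cloud = cloud_latency_ms(network_round_trip_ms=80.0, server_inference_ms=10.0)
local = local_latency_ms(device_inference_ms=25.0)

print(f"cloud: {cloud} ms, local: {local} ms, saved: {cloud - local} ms")
```

Under these assumptions the device wins even though its chip is slower than the server, because the network round trip dominates the total. With a faster connection or a much larger model, the comparison could go the other way.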

Here is where things get subtle but significant. Smart hardware integration is built directly into the chips, sensors, and system designs. You might not see it, but you will feel the difference.

Take the simple act of unlocking a device, adjusting your car’s route, or controlling your home’s temperature. These are not just ‘smart’ features; they are AI-driven decisions powered by properly integrated hardware.

This level of integration means AI is built into the hardware and its structures to give users the best experience.

One of the most exciting changes in computing hardware in 2025 is the shift to adaptive hardware. Systems are being built with dynamic capabilities that let them change their configurations based on the task or the data stream.

Imagine a chip that reconfigures itself in the middle of a task and optimizes its layout based on the activity. This is now a reality with the latest computing hardware in 2025.

These self-optimizing systems are powered by AI and also enable AI to work better. It is a loop: AI makes the hardware smarter, and the hardware boosts AI’s potential.

Edge computing was once a dream, but now it is an everyday reality. Devices at the edge of the network, like your phone, your car, and your doorbell, carry enough intelligence to run AI models without needing a data center.

However, it is important to understand why this matters. When AI lives on the edge, everything becomes faster, more private, and less dependent on connectivity.

To make this happen, hardware needs to carry more responsibility: it has to be lean, fast, and intelligent. This is why machine learning hardware is being built even into low-power chips, so that they run fast and produce minimal heat.

AI is no longer just a feature; it is a foundation for a better and safer future.

Like every good thing, computing hardware in 2025 has its problems. This new era of advanced hardware comes with its own set of challenges:

More computation means the chips will generate more heat.

Smarter hardware can open up new attack surfaces for scammers and hackers.

AI models vary widely and the hardware needs to adapt quickly.

With growing demand, power consumption has become a global concern.

These are not minor problems, but the positive side is that the industry is evolving fast and learning as it builds.

Unlike ten years ago, hardware in 2025 is no longer static: it updates, learns, and adapts to your usage.

In 2025, computing hardware is not about chasing higher specs anymore. It is about building a system that is ready for intelligence, not just speed.

The lines between software and hardware are blurring as technology evolves. Systems are now designed with future AI infrastructure in mind. This is just the start of a new era in which devices will not just be faster than before, but will work smarter by adjusting and evolving according to your needs.

This content was created by AI